Key Takeaways

- Real-time simulation strengthens evidence with deterministic timing, low jitter, and reproducible I/O, which supports credible validation in academic settings.

- Hardware-in-the-loop simulation cuts risk and cost by exercising controllers under realistic faults, start-up conditions, and edge cases before full-power tests.

- A staged workflow from modeling to HIL to power lab emulation speeds iteration, improves safety, and keeps results consistent across semesters.

- Clear timing targets, careful model partitioning, and scripted verification routines form the backbone of a dependable research pipeline.

- OPAL-RT provides precise timing, open toolchain compatibility, and support resources that map to coursework, theses, and funded research needs.

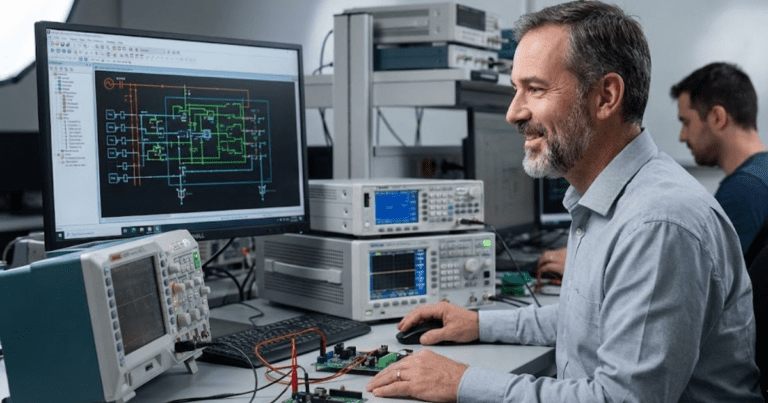

Real-time simulation lets you test electrical ideas at the speed they run. You see cause and effect without waiting for batch results, and you keep safety on your side. That mix of timing, fidelity, and repeatability gives researchers confidence to push new control strategies. Time saved in the lab often turns into better papers, tighter prototypes, and stronger evidence.

You may be building a converter, validating a microgrid controller, or proving a protection concept. The hurdle is always the same: turning a model into convincing, testable behavior. Real-time methods bridge that gap with deterministic execution and hardware I/O that feels like a bench setup. The result is a workflow that supports bold ideas, careful checks, and clear outcomes.

Understanding real-time simulation in electrical research

Real-time simulation means a model executes with a fixed time step that matches wall-clock time. The computation for each step must finish before the next tick; a step that finishes late is an overrun, which breaks causality and lets simulated signals drift out of alignment with physical ones. The simulator interacts with external devices through analog, digital, and communication I/O, so controllers see authentic waveforms. That closed loop lets you study transients, faults, and controls with timing that mirrors a lab setup.
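The timing rule above can be checked from a run log. The following is a minimal sketch, assuming step start times and per-step execution times have been recorded in seconds; the names `TimingReport` and `check_timing` and the 50 µs example values are illustrative, not part of any vendor API.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class TimingReport:
    overruns: int        # steps whose computation took longer than the time step
    max_jitter_s: float  # worst deviation of a step period from the nominal step
    worst_exec_s: float  # longest observed execution time


def check_timing(step_s: float,
                 start_times_s: Sequence[float],
                 exec_times_s: Sequence[float]) -> TimingReport:
    """Summarize overruns and period jitter for a fixed-step run."""
    if len(start_times_s) != len(exec_times_s):
        raise ValueError("need one execution time per step")
    overruns = sum(1 for t in exec_times_s if t > step_s)
    # Period between consecutive step starts, compared with the nominal step.
    periods = [b - a for a, b in zip(start_times_s, start_times_s[1:])]
    max_jitter = max((abs(p - step_s) for p in periods), default=0.0)
    return TimingReport(overruns, max_jitter, max(exec_times_s, default=0.0))


# Hypothetical 50 us step with one late start and one overrun.
report = check_timing(
    step_s=50e-6,
    start_times_s=[0.0, 50e-6, 101e-6, 150e-6],
    exec_times_s=[30e-6, 32e-6, 55e-6, 31e-6],
)
print(report)
```

Reporting the overrun count and worst-case jitter next to the step size gives readers the numbers they need to judge whether a run was truly real-time.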

For electrical research, this approach must meet strict requirements for latency, jitter, and numerical stability. Power devices, converters, and protection logic react on microsecond to millisecond scales, so the platform must compute fast and predictably. Partitioning heavy models, selecting suitable solvers, and using FPGA resources when needed help meet those goals. The payoff is transparent behavior that you can trust during controller development, validation, and teaching.

Why real-time simulation is essential for modern academic validation

Timely evidence matters when experiments must show safe operation, stable control, and repeatable fault response. Real-time execution gives you deterministic timing, which strengthens claims about stability margins and controller robustness. Research teams gain a controllable setting to compare designs across the same disturbances and setpoints. Funding proposals, theses, and journal submissions benefit from data that holds up under scrutiny.

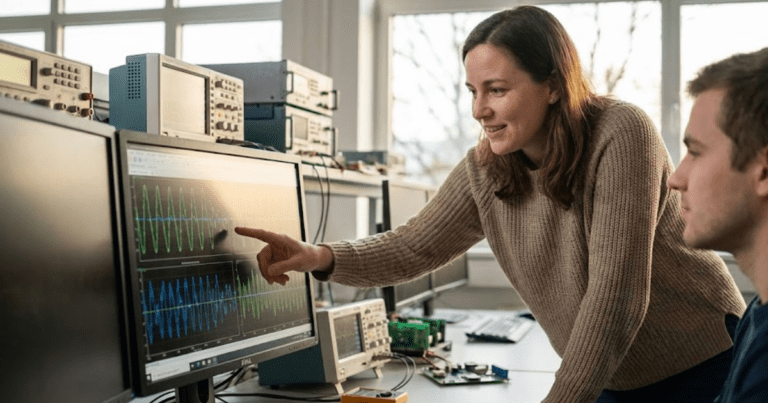

Reproduce fast control behavior with confidence

High-speed control loops depend on precise timing, not just average step size. Real-time simulation preserves loop delays, sampling, and quantization, so phase margins and gain limits reflect actual conditions. That precision supports studies of PWM strategies, observer bandwidths, and saturation handling without guessing about timing artifacts. You get clear insight into where a controller succeeds and where it needs adjustment.
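One way to see why loop delay matters for phase margin: a pure delay of T_d seconds adds a phase lag of 360 · f · T_d degrees at frequency f. The sketch below applies that relation; the 20 kHz sampling rate, 1 kHz crossover, and 1.5-sample total delay are assumed illustrative values, and `delay_phase_lag_deg` is a hypothetical helper name.

```python
def delay_phase_lag_deg(freq_hz: float, delay_s: float) -> float:
    """Phase lag in degrees contributed by a pure time delay at freq_hz."""
    return 360.0 * freq_hz * delay_s


# Assumed example: 20 kHz sampling, 1.5 samples of total loop delay,
# evaluated at a 1 kHz crossover frequency.
sample_s = 1.0 / 20_000
lag_deg = delay_phase_lag_deg(freq_hz=1_000.0, delay_s=1.5 * sample_s)
print(f"{lag_deg:.1f} deg of phase margin consumed by delay")
```

With these assumed numbers the delay alone costs about 27 degrees, which is why a test bench that distorts loop delay can make a controller look more stable than it is.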

A consistent time base also keeps multi-rate systems honest. Current loops, voltage loops, and supervisory logic can run at their intended rates without slippage. Investigations of limit cycles, dead-time effects, and anti-windup schemes become more credible. Reviewers can follow the timing chain from sensor input to actuator output, which strengthens your argument.
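A multi-rate schedule can be expressed as integer dividers of one base tick, which is one simple way to keep loops from slipping relative to each other. This is a sketch under assumed rates (a 50 µs base step, with the voltage loop and supervisory logic running 10 and 200 times slower); `due_tasks` is a hypothetical name.

```python
from typing import Dict, List


def due_tasks(tick: int, dividers: Dict[str, int]) -> List[str]:
    """Return the tasks that run on this base tick.

    A divider of N means the task runs once every N base ticks.
    """
    return [name for name, div in dividers.items() if tick % div == 0]


# Assumed rates on a 50 us base step: current loop every tick,
# voltage loop every 500 us, supervisory logic every 10 ms.
dividers = {"current_loop": 1, "voltage_loop": 10, "supervisory": 200}

counts = {name: 0 for name in dividers}
for tick in range(2000):  # 2000 ticks of 50 us = 100 ms
    for name in due_tasks(tick, dividers):
        counts[name] += 1

print(counts)  # {'current_loop': 2000, 'voltage_loop': 200, 'supervisory': 10}
```

Because every rate derives from the same tick counter, the ratio between loops stays exact over any run length.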

De-risk lab prototypes before power-up

Power mistakes are costly, and schedule slips are even harder to recover from. Real-time simulation lets you push a controller through start-up, fault, and shut-down sequences while watching every intermediate state. You can verify soft-start slopes, current limits, and protection thresholds before cables ever touch a power rack. That reduces equipment stress, lab time, and safety concerns.

Structured test scripts amplify the benefit. You can replay waveforms from earlier cases, insert realistic sensor noise, and vary latencies to map the safe region. Edge cases like brownouts or unsynchronized breakers can be explored without damaging hardware. When the lab day arrives, you walk in with settings that already meet requirements.
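Replaying a recorded waveform with added latency and sensor noise might look like the sketch below. It assumes the waveform is a plain list of samples on the simulator time step, models latency as a whole number of steps, and uses a seeded generator so every rerun sees the same noise; `perturb_waveform` is a hypothetical helper.

```python
import random
from typing import List


def perturb_waveform(samples: List[float], delay_steps: int,
                     noise_std: float, seed: int = 0) -> List[float]:
    """Delay a recorded waveform by whole steps and add Gaussian noise."""
    if delay_steps < 0:
        raise ValueError("delay_steps must be non-negative")
    if not samples:
        return []
    rng = random.Random(seed)  # seeded so reruns are reproducible
    # Hold the first sample during the delay, then truncate to original length.
    delayed = ([samples[0]] * delay_steps + list(samples))[:len(samples)]
    return [x + rng.gauss(0.0, noise_std) for x in delayed]


recorded = [1.0, 2.0, 3.0, 4.0]
clean_delayed = perturb_waveform(recorded, delay_steps=2, noise_std=0.0)
noisy = perturb_waveform(recorded, delay_steps=2, noise_std=0.05, seed=42)
print(clean_delayed)
```

Sweeping `delay_steps` and `noise_std` over a grid, while logging which combinations keep the controller within limits, is one way to map the safe region described above.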

Generate trustworthy evidence for peer review

Strong claims require reproducible tests with well-defined timing. Real-time platforms let you publish exact step sizes, I/O latencies, and solver choices, which support independent reproduction. The ability to export test profiles and data logs makes result sharing straightforward. That level of detail reduces ambiguity and clarifies how conclusions were reached.

Repeatability across semesters matters in academic settings. New students can rerun the same scenarios, compare controller variants, and extend the study in clear ways. Shared projects benefit from labeled datasets, consistent triggers, and identical fault injections. Supervisors gain confidence that improvements reflect design changes, not test drift.

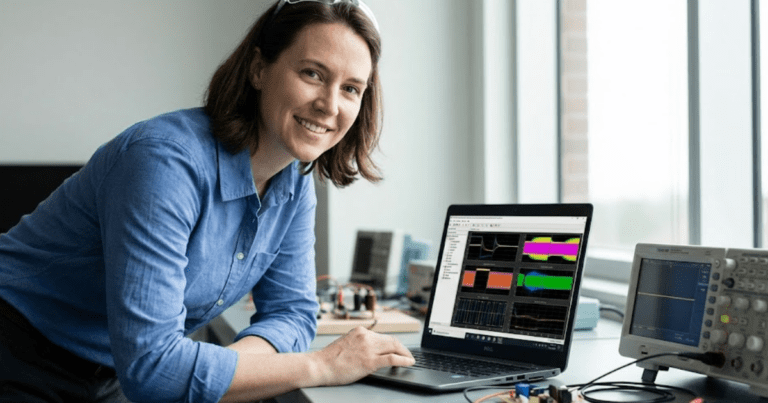

Shorten iteration loops across student teams

Projects move faster when test cycles match the cadence of coursework and grant milestones. Real-time simulation reduces the wait time between design, test, and analysis, enabling more frequent feedback. Students get immediate clarity on parameter choices and code structure, accelerating learning. Faculty see quicker convergence on a solid design.

Short iterations also improve team handoffs. Control code, plant models, and test profiles pass cleanly between contributors with fewer surprises. A common platform reduces rework when students graduate or join midstream. Outcomes feel cumulative, and the lab grows its capability with each project.

To sum up, real-time execution strengthens claims, reduces lab risk, and keeps projects moving. The approach aligns with academic needs for clarity, safety, and repeatability. Students learn faster when tests give quick, trustworthy feedback. Supervisors gain cleaner evidence, better scheduling, and fewer rebuilds.

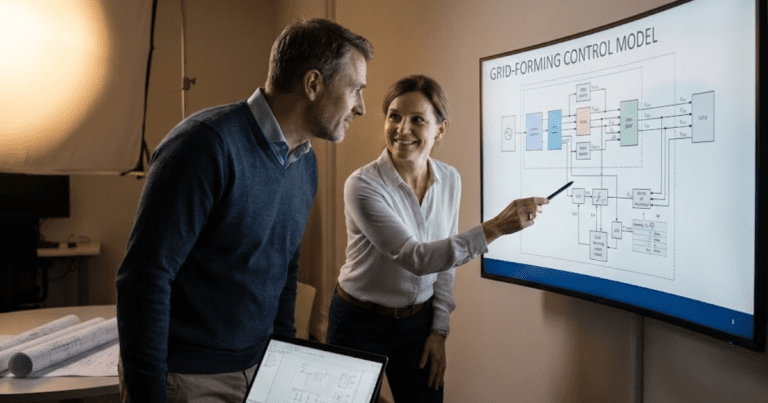

How electrical simulation connects to hardware in the research process

Electrical simulation moves beyond screen-only testing when you link models to I/O, sensors, and controllers. The moment you exchange voltages, currents, and messages with physical devices, the experiment starts to reflect bench conditions. This link can start early with simple signal conditioning, then grow to full controller checkout. Each stage adds confidence while keeping power risk contained.

A practical flow uses staged coupling. You begin with plant models and a software controller, then connect a physical controller to a simulator that presents realistic waveforms. Later, you add power amplifiers or reduced-scale rigs while keeping the same test scripts. That progression supports safe learning, controlled risk, and clear performance metrics for electrical simulation.

| Stage | Research goal | Model scope | Hardware linkage | Typical metrics |
| --- | --- | --- | --- | --- |
| Concept and modeling | Prove basic behavior and stability | Reduced-order plant, ideal switches | None, software only | Step response, eigenvalues, loop gains |
| Controller design | Tune loops and logic | Detailed plant, quantized sensors | I/O cards to controller, no power stage | Latency, jitter, tracking error |
| HIL checkout | Verify closed-loop behavior under faults | Full plant, non-ideal effects | Controller to the simulator via analog and digital I/O | Trip time, ride-through, protection limits |
| Power lab emulation | Exercise converter dynamics safely | Realistic network and loads | Low-voltage power interface to the simulator | Thermal margins, switching stress |
| Field or hardware test | Confirm settings on physical setup | Minimal model, focus on edge cases | Controller and power hardware | Efficiency, EMI bounds, reliability stats |

Practical examples of real-time simulation in academic power systems

Credible examples help you see where the method shines and where it saves time. Real-time execution supports control, protection, and grid studies without putting equipment at risk. The format also suits thesis timelines, course projects, and multi-year platforms. Students can build confidence while tackling problems that matter to faculty and sponsors.

- Microgrid black start and reconnection: Use a simulator to feed breaker status, frequency, and voltage to a controller during start-up. Confirm synchronization checks, soft load pickup, and safe reconnection after islanding, then compare profiles across strategies.

- Converter current-limit behavior: Drive a physical controller with realistic inductance, resistance, and back‑emf while stepping load. Verify current-limit transitions, thermal headroom, and recovery without stressing a power rack.

- Protection relay coordination: Inject faults at varied locations with changing source impedance. Measure clearing times, verify selectivity, and adjust settings while keeping the same disturbance scripts.

- Wide-area control with time alignment: Stream phasor-like measurements with controlled latency and jitter. Study controller resilience to packet delay, loss, and clock offsets, and quantify margins.

- Inverter-based resource ride-through: Emulate weak grid conditions and voltage dips under different short-circuit ratios. Confirm ride-through logic, current limiting, and voltage support without damaging prototypes.

- Cyber-physical intrusion checks: Simulate sensor tampering and command spoofing while monitoring controller defenses. Record detection latencies, fallbacks, and recovery paths in a safe setting.

These examples cut risk while revealing subtle timing effects that static tests miss. Students gain practical skills, and supervisors collect cleaner data. Reuse of scripts and datasets shortens future studies. Funding bodies see a repeatable method that delivers credible outcomes.

Key benefits of hardware in the loop simulation for researchers

Research labs face tight schedules, safety limits, and the need to compare designs under fair conditions. Hardware-in-the-loop simulation addresses those pressures with closed-loop tests that respect timing and I/O fidelity. You study behavior that depends on sampling, quantization, and interrupts, not just average dynamics. The method supports careful exploration, faster tuning, and safer ramp-up.

Test controllers against realistic faults

Controllers behave differently when sensors saturate, converters clip, or lines trip. Hardware-in-the-loop simulation lets you trigger those events while preserving timing, ordering, and measurement scaling. You can evaluate protection thresholds, fallback states, and restart logic without putting people or equipment at risk. That insight guides parameter choices and code structure.

Fault libraries improve repeatability and coverage. You can vary depth, duration, and location while keeping all other conditions fixed. Students compare designs using exactly the same disturbances, which supports fair conclusions. Supervisors collect data that maps performance across a wide range of conditions.
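A fault library of this kind can be generated as a full grid over location, depth, and duration. The sketch below assumes voltage-dip faults with depth expressed in per unit (0.5 meaning a 50% drop); the bus names, values, and the `FaultCase` structure are illustrative only.

```python
from dataclasses import dataclass
from itertools import product
from typing import Iterable, List


@dataclass(frozen=True)
class FaultCase:
    location: str       # where the fault is applied (hypothetical bus name)
    depth_pu: float     # assumed convention: dip depth in per unit
    duration_ms: float  # how long the fault is held

    @property
    def case_id(self) -> str:
        """Stable label for logs, so runs can be matched across designs."""
        return f"{self.location}_d{self.depth_pu:.2f}_t{self.duration_ms:g}ms"


def build_fault_library(locations: Iterable[str],
                        depths_pu: Iterable[float],
                        durations_ms: Iterable[float]) -> List[FaultCase]:
    """Every combination of location, depth, and duration, in a fixed order."""
    return [FaultCase(loc, d, t)
            for loc, d, t in product(locations, depths_pu, durations_ms)]


library = build_fault_library(["bus1", "bus2"], [0.2, 0.5], [100, 150, 500])
print(len(library), library[0].case_id)
```

Because the grid order and labels are deterministic, two controller variants can be compared case by case on exactly the same disturbances.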

Achieve high fidelity without full-power rigs

Not every lab has space or budget for high-power benches. Closed-loop tests with accurate plant models let you observe converter dynamics, delay chains, and sensor behavior at low power. The controller sees voltages and currents that look authentic, even when the actual load is small. You reserve heavy hardware for final confirmation.

Fidelity comes from careful solver choices and model partitioning. Fast subcircuits can move to FPGA resources, while slower parts run on CPUs. That mix maintains short time steps where they matter most. Results feel close to lab measurements, with a fraction of the overhead.
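As a rough illustration of that split, subsystems can be sorted by the time step each one needs. The threshold below is an assumption a lab would choose for its own platform, not a published limit, and the subsystem names and step sizes are hypothetical.

```python
from typing import Dict, List, Tuple


def partition_by_step(required_step_s: Dict[str, float],
                      fpga_threshold_s: float) -> Tuple[List[str], List[str]]:
    """Split subsystems into (fpga, cpu) lists by required time step.

    Anything needing a step shorter than fpga_threshold_s goes to the FPGA
    list; the threshold is a lab-specific assumption.
    """
    fpga = sorted(n for n, s in required_step_s.items() if s < fpga_threshold_s)
    cpu = sorted(n for n, s in required_step_s.items() if s >= fpga_threshold_s)
    return fpga, cpu


# Hypothetical subsystems and the step each one needs for acceptable accuracy.
subsystems = {
    "converter_bridge": 0.5e-6,
    "dc_link": 20e-6,
    "grid_equivalent": 50e-6,
    "supervisory_logic": 1e-3,
}
fpga, cpu = partition_by_step(subsystems, fpga_threshold_s=5e-6)
print("FPGA:", fpga)
print("CPU:", cpu)
```

A real partition also has to respect coupling between subsystems and available FPGA resources, so treat this as a first-pass sorting aid rather than a design rule.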

Scale experiments across semester timelines

Course projects and theses benefit from short test cycles. HIL benches start quickly, run reliably, and support long test queues without re-wiring the lab. Students spend more time studying behavior, and less time waiting for hardware. That keeps learning on track and supports tight submission dates.

Shared rigs turn into platforms that persist across cohorts. Scenarios, datasets, and configuration files carry over with minimal drift. Teams can extend prior work confidently because the test bed stays consistent. The lab grows its catalog of verified cases and reference controllers.

Collect richer data with closed-loop stress tests

A closed loop reveals how software and hardware interact under stress. You can sweep parameters, insert noise, and log every signal with the same time base. That makes post-processing cleaner, and comparisons stronger. Hypotheses are easier to confirm or refute.

Structured logging supports review and teaching. Students can annotate runs, export summaries, and attach plots to lab notes. Faculty can trace root causes through time-aligned channels. Future projects benefit from a growing library of labeled results.

In short, hardware-in-the-loop simulation reduces risk, raises fidelity, and supports steady progress. Labs gain flexible benches that students can run confidently. Supervisors receive data that answers the right questions. Budgets stretch further, and lab time is used well.

Steps to integrate real-time simulation platforms into your research workflow

Successful adoption starts with clear aims, timing targets, and a plan for I/O. Teams that focus on partitioning, interfaces, and repeatable tests get value early. You can start small, then add features as projects expand. A measured path also helps students learn the method without steep hurdles.

Define research outcomes and timing constraints

State the claims you want to support, and the numbers that must appear in results. That may include step size, maximum jitter, and acceptable closed-loop delay. List the faults, setpoints, and operating modes that must be exercised. Specify what data proves each claim.

Timing targets guide platform choices and model scope. Fast converters might require microsecond steps, while network studies tolerate longer intervals. Record these bounds early to avoid rework later. With targets in hand, your team can make consistent decisions.
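Recording the targets as data makes them easy to check against a delay budget. This is a minimal sketch; the field names, the four delay contributions, and all numeric values are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class TimingTargets:
    step_s: float            # simulation time step
    max_jitter_s: float      # largest acceptable step-period deviation
    max_loop_delay_s: float  # acceptable closed-loop delay, end to end


def loop_delay_margin(targets: TimingTargets,
                      contributions_s: Dict[str, float]) -> float:
    """Remaining delay budget; a negative value means the target is missed."""
    return targets.max_loop_delay_s - sum(contributions_s.values())


# Hypothetical targets for a converter study and an assumed delay breakdown.
targets = TimingTargets(step_s=10e-6, max_jitter_s=1e-6, max_loop_delay_s=40e-6)
budget = {
    "simulator_output": 3e-6,
    "controller_sampling": 5e-6,
    "controller_compute": 15e-6,
    "simulator_step": 10e-6,
}
margin = loop_delay_margin(targets, budget)
print(f"margin: {margin * 1e6:.1f} us")
```

Keeping this file under version control alongside the models gives the team one place to see which bound a design change would violate.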

Select models and partition for real-time

Choose plant detail that supports conclusions without wasting cycles. Move fast-switching parts or protection elements into smaller, optimized submodels. Keep slower supervisory logic on the CPU side to save resources. Validate each piece alone before composing the full system.

Partitioning also simplifies tuning and debugging. You can swap implementations for sensitive parts without touching the rest. Clear interfaces promote reuse across projects, grants, and theses. Over time, a curated library of blocks forms a reliable base.

Configure I/O and protocol interfaces

List the signals that must cross the simulator boundary, then match them to analog, digital, PWM, or serial resources. Pay attention to scaling, sampling, and filtering on both sides of each interface. Verify that cable runs, isolation, and grounding meet lab rules. Confirm that timestamps and triggers align as planned.
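Scaling is a common source of silent errors, so it helps to write the mapping down explicitly. The sketch below maps a physical quantity onto an analog output, assuming a symmetric ±10 V range and an idealized 16-bit quantizer; real converters differ in code range and offset, and `to_dac_volts` is a hypothetical helper.

```python
def to_dac_volts(value: float, full_scale_value: float,
                 dac_range_v: float = 10.0, bits: int = 16) -> float:
    """Scale a physical value to output volts, clip to range, and quantize.

    Assumes a symmetric +/-dac_range_v output and an idealized quantizer.
    """
    if full_scale_value <= 0:
        raise ValueError("full_scale_value must be positive")
    volts = value / full_scale_value * dac_range_v
    volts = max(-dac_range_v, min(dac_range_v, volts))  # clip to output range
    lsb = 2 * dac_range_v / (2 ** bits)                 # volts per code
    return round(volts / lsb) * lsb


# Assumed scaling: 1000 V of simulated bus voltage maps to 10 V at the output.
print(to_dac_volts(400.0, full_scale_value=1000.0))   # close to 4.0 V
print(to_dac_volts(1500.0, full_scale_value=1000.0))  # clipped at 10.0 V
```

The controller side needs the inverse mapping with the same full-scale value, which is why the channel map should record scaling for both ends of each signal.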

Early bench checks prevent noisy data and misinterpreted delays. A short session with an oscilloscope can reveal offset, skew, and clipping. Treat this as a calibration step, not a last-minute fix. Once I/O is solid, the rest of the setup acts predictably.

Establish verification and calibration routines

Agree on reference cases with known outcomes before running large studies. Use those cases to confirm solver choice, step size, and I/O timing. Automate pass or fail checks where possible, then store logs for later audits. Keep configuration under version control to track changes.
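An automated pass or fail check can be as small as the sketch below, which measures trip time from a logged run and compares it with a limit. It assumes the log provides a time vector plus boolean fault and trip flags on the same time base; the function names and the 4 ms limit are illustrative.

```python
from typing import Optional, Sequence


def trip_time_s(times_s: Sequence[float],
                fault_flag: Sequence[bool],
                trip_flag: Sequence[bool]) -> Optional[float]:
    """Time from fault inception to the first trip, or None if either is absent."""
    fault_t = next((t for t, f in zip(times_s, fault_flag) if f), None)
    if fault_t is None:
        return None
    trip_t = next((t for t, tr in zip(times_s, trip_flag)
                   if tr and t >= fault_t), None)
    return None if trip_t is None else trip_t - fault_t


def check_trip(times_s: Sequence[float], fault_flag: Sequence[bool],
               trip_flag: Sequence[bool], limit_s: float) -> bool:
    """Pass only if a trip occurred and it was within the limit."""
    measured = trip_time_s(times_s, fault_flag, trip_flag)
    return measured is not None and measured <= limit_s


# Hypothetical 1 ms log: fault starts at 1 ms, relay trips at 4 ms.
times_s = [0.000, 0.001, 0.002, 0.003, 0.004, 0.005]
fault_flag = [False, True, True, True, True, True]
trip_flag = [False, False, False, False, True, True]
print("PASS" if check_trip(times_s, fault_flag, trip_flag, limit_s=0.004) else "FAIL")
```

Treating a missing trip as a failure, rather than skipping the case, keeps a silent protection fault from passing the reference suite.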

Calibration should include sensor models and actuator limits. Validate limits on rate, saturation, and quantization so controllers do not face surprises. Update the reference suite when the model evolves or the controller gains features. This habit preserves trust across semesters.

Train users and maintain a reproducible pipeline

Students and staff need quick starts, simple playbooks, and clear patterns for adding features. Provide project templates, naming rules, and data export scripts. Short workshops that pair hands-on time with review of timing goals work well. Peer coaching keeps skills growing throughout the year.

Reproducibility relies on scripts over manual clicking. Use batch runs for sweeps, and capture system info with each log. Store test assets where new team members can find them easily. A predictable pipeline reduces ramp-up time and lab bottlenecks.
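Capturing system information with each log can be done with the Python standard library alone. The sketch below builds a small metadata record for the host running the test scripts; the field names and the scenario, step, and solver values are assumptions, and details of the real-time target itself would have to come from that platform's own tools.

```python
import json
import platform
import sys
from datetime import datetime, timezone


def run_metadata(scenario: str, step_s: float, solver: str) -> dict:
    """Collect settings and host details to store next to a run's data log."""
    return {
        "scenario": scenario,
        "step_s": step_s,
        "solver": solver,
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "python": sys.version.split()[0],
        "platform": platform.platform(),
        "machine": platform.machine(),
    }


# Hypothetical run description; write the JSON beside the data file.
meta = run_metadata("bus1_dip_150ms", step_s=50e-6, solver="fixed-step")
print(json.dumps(meta, indent=2))
```

Storing this record with every batch run lets a new team member tell at a glance which settings produced a given dataset.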

A compact overview helps align roles and outputs.

| Step | Lead role | Primary outputs | Key tools or standards |
| --- | --- | --- | --- |
| Define outcomes and constraints | Principal investigator | Timing targets, test scenarios, success criteria | Requirements template, timing log |
| Partition models for real-time | Simulation engineer | CPU and FPGA split, validated submodels | Solver checklist, unit tests |
| Configure I/O and safety | Lab manager | Channel maps, scaling, isolation checks | I/O map, safety checklist |
| Verify and calibrate | Graduate researcher | Reference cases, pass or fail rules, baselines | Automated scripts, data sheets |
| Train and operate | Teaching assistant | Playbooks, templates, run logs, storage layout | Version control, data pipeline |

These steps keep scope clear, timing honest, and results reproducible. Small wins appear early, then larger wins follow as the bench matures. Students learn faster because the process feels consistent. Supervisors gain reliable data without extra rework.

Future opportunities for electrical researchers using real-time simulation

New computing approaches, fresh teaching models, and expanding datasets are opening useful directions. Real-time methods sit at the center of many of these paths, pairing timing precision with scalable workflows. Labs can extend reach without buying every power asset outright. Students gain skills that map cleanly to industry practices.

- Hybrid CPU and FPGA co-simulation: Move the highest-speed pieces into programmable logic while keeping larger models on processors. This mix supports microsecond steps for converters and broader grids at sensible rates.

- Cloud-based remote benches: Share access to rigs across campuses with queueing, storage, and role-based permissions. Students run tests from home while lab staff maintain hardware safety.

- AI-assisted tuning and diagnosis: Train models on labeled logs to suggest parameters, detect anomalies, and flag drift. Researchers focus on interpretation while routine fits happen quickly.

- Digital twins of lab assets: Maintain up-to-date models of power racks, cables, and sensors that sit beside the physical setup. Planned upgrades and maintenance benefit from rehearsed scenarios.

- Low-inertia grid studies at scale: Study converter-dominated networks with realistic delays, measurement noise, and protection interactions. Findings support clearer guidance on settings, limits, and coordination.

- Standards-focused regression testing: Script conformance checks that run nightly on benches, then store signed results. Grant reviewers and partners see steady progress with traceable proof.

These opportunities build on skills many labs already hold. The step from good practice to great results often lies in repeatability, not raw power. Careful investment in scripts and training pays dividends. Students leave with strong habits, and labs keep momentum.

Real-time simulation runs a plant model on hardware that completes each time step before the next tick of the clock. The simulator exchanges I/O with external devices so a controller experiences realistic waveforms and delays. This approach preserves sampling, quantization, and interrupt effects that shape control performance. Researchers get closed-loop behavior that mirrors a lab setup while keeping risk contained.

Teams define timing targets, then connect controllers to a simulator that emulates the plant and grid. They run scripted scenarios that include faults, load steps, and setpoint changes while logging every signal. Results are compared against pass or fail rules, with special attention to trip times, stability margins, and recovery paths. Findings are repeatable because timing, I/O, and scenarios remain fixed across runs.

Hardware-in-the-loop connects a physical controller to a simulated plant, so tests feel like a bench session without full-power rigs. Candidates can probe start-up, protection, and fault cases early, then refine code with fast feedback. Time saved in setup shifts into deeper analysis, better ablations, and stronger evidence. The same bench supports paper figures, thesis chapters, and later follow-up studies.

A common setup includes a real-time target with analog and digital I/O, isolation, and safe connectors. Labs add PWM capture, encoder inputs, and communication channels when needed for drives or converters. Power interfaces range from signal-only to low-voltage amplifiers, depending on scope and safety rules. Storage, scripts, and version control complete the pipeline for reproducible runs.

Students learn best by starting with clear timing goals, simple models, and short scripted tests. Early exercises focus on measuring latency, jitter, and scaling across I/O paths. Later projects add faults, parameter sweeps, and detailed logging with consistent triggers. A steady progression builds confidence, solid habits, and reliable results.