Key Takeaways

- Clear multi-domain models give engineers, educators, and students a reliable way to see how electrical, mechanical, and control behaviour interact, instead of guessing from isolated single-domain views.

- System representation gains strength when models follow shared conventions for naming, structure, units, and documentation, so teams can read, review, and reuse each other’s work with confidence.

- Reliable models for component interaction studies rely on verified parameters, stable numerical behaviour, and transparent assumptions, all anchored in physics that matches the system under study.

- Consistent preparation steps, such as defined objectives, scoped test cases, calibrated submodels, and frozen configurations, reduce variability in results and support reproducible testing across courses and projects.

- Model clarity directly improves debugging and learning, because users can trace signals, understand interfaces, and connect simulations to theory, which strengthens engineering judgment and supports safer system decisions.

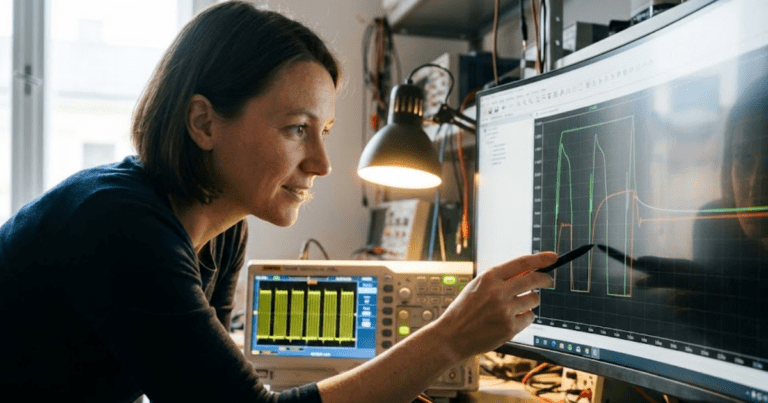

Reliable multi domain models can feel like the difference between guessing and actually seeing how your system behaves. For power systems and power electronics engineers, confidence in a model is tied directly to how clearly it represents the physics that matter. When components span electrical, mechanical, control, and communication domains, small shortcuts in modelling often grow into confusing test results and long nights in the lab. Careful attention to model clarity helps your team move from debugging the model itself to learning from the behaviour it reveals.

Clear system representation is not just an aesthetic preference for tidy diagrams. It directly affects how quickly you can answer questions about stability, protection margins, and converter behaviour under stressed conditions. For educators and researchers, the way a model is structured affects how students understand cause and effect in complex systems. For technical leaders, consistent modelling practices create test results that can be shared, repeated, and trusted across projects and teams.

Why engineers rely on clear multi domain models for testing

Multi domain models sit at the centre of how you study power systems, converters, and control logic before hardware exists or before you touch a live feeder. A clear model gives you the confidence that when a protection relay trips, a converter saturates, or a voltage sag propagates, the behaviour you see reflects physics and not modelling artefacts. You are able to ask precise questions about operating points, contingencies, and controller settings because the structure of the model mirrors the structure of the system. That connection between the model and the physical system is what turns simulation from a “nice reference” into a primary source of engineering evidence.

Engineers also rely on clarity because most meaningful studies are team efforts. A grid engineer, a protection specialist, and a power electronics designer often share the same multi domain model, each focusing on different parts of the system. If interfaces, naming conventions, and assumptions are opaque, every handoff adds friction, confusion, and rework. When the model is transparent, contributors can inspect, question, and refine parts of the system without breaking results that others depend on.

How multi domain modelling improves system representation accuracy

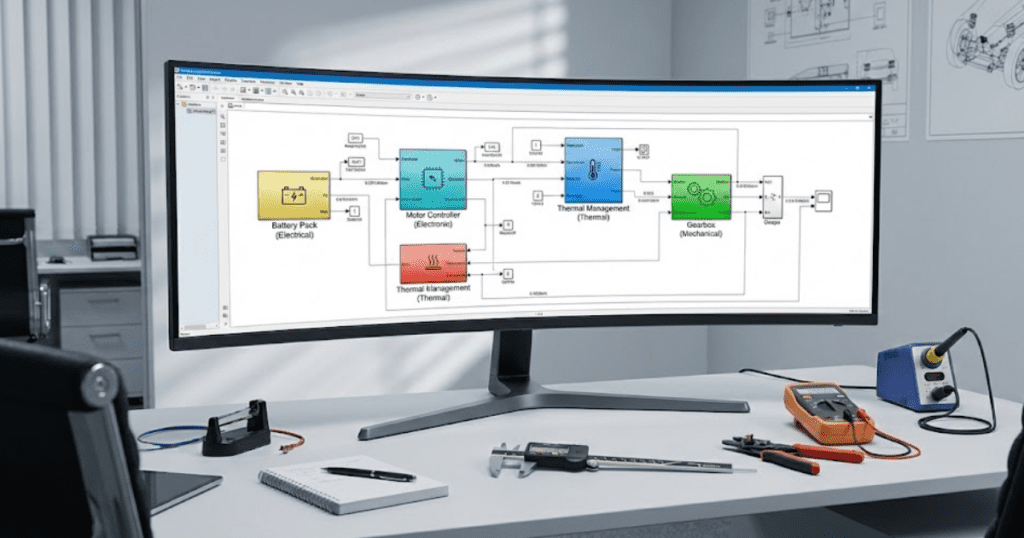

Multi domain modelling connects electrical, mechanical, control, and communication behaviour inside one coherent system representation. When that connection is handled carefully, the model captures cross-domain effects that are often missed in single-domain approximations. This directly improves how you estimate stress on components, timing of events, and the interactions between converters, lines, and controllers. A more complete view reduces the gap between simulated test cases and what you see once hardware is online.

- Consistent physics across domains: A well-built multi domain model uses equations and parameters that align across all domains, instead of treating each subsystem as a black box. This consistency ensures that torque, voltage, current, and power all obey the same conservation principles, so power crossing an electromechanical interface balances on both sides once losses are counted, and results stay physically plausible under stressed conditions.

- Accurate interface signals: Electrical, mechanical, and control interfaces often carry information between domains, such as torque feedback, DC-link voltage, or PLL frequency estimates. Careful modelling ensures that scaling, units, and delays are all correct, which prevents subtle errors that can distort behaviour.

- Shared time resolution and solver settings: When multi domain modelling uses appropriate time steps and solver choices, fast switching effects, mechanical transients, and control loops remain aligned. This shared resolution allows you to study events like faults, switching sequences, and oscillations without hiding interactions behind numerical smoothing.

- Configurable levels of detail: Effective multi domain models offer both high-fidelity detail and simplified representations for different study goals. You might use a detailed switching converter for harmonic analysis, and a simplified average model for long-duration system studies, while keeping the same signal interfaces and parameters.

- Explicit representation of delays and latencies: Control and communication elements often introduce delays that matter for stability and protection. Multi domain modelling that includes these delays explicitly gives you more accurate stability margins and more realistic response to faults and setpoint changes.

- Consistent parameter sets across domains: Parameters such as rated power, base voltages, inertia constants, and controller gains should line up across electrical and mechanical domains. When multi-domain modelling keeps those parameter sets coordinated, your system representation behaves as a single, coherent model instead of a collection of parts glued together.
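
One way to keep a parameter set coordinated is to derive every base-dependent quantity from a single shared record instead of typing values into each block separately. The Python sketch below is illustrative only: `MachineBase` and its fields are hypothetical names, not part of SPS SOFTWARE or any other tool. It derives the electrical base impedance Z_base = V_LL² / S_base and the mechanical inertia constant H = J·ω_m² / (2·S_base) from the same rated values, so a change to the base power moves both domains together.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class MachineBase:
    """Hypothetical shared base for one machine: every derived value uses the same s_base_va."""
    s_base_va: float        # base (rated) apparent power, VA
    v_ll_base_v: float      # base line-to-line RMS voltage, V
    rated_speed_rpm: float  # rated mechanical speed, rpm

    @property
    def z_base_ohm(self) -> float:
        # Electrical base impedance: Z_base = V_LL^2 / S_base
        return self.v_ll_base_v ** 2 / self.s_base_va

    @property
    def omega_m_base(self) -> float:
        # Rated mechanical speed converted from rpm to rad/s
        return self.rated_speed_rpm * 2.0 * math.pi / 60.0

    def inertia_constant_s(self, j_kgm2: float) -> float:
        # Mechanical side: H = kinetic energy at rated speed / S_base, in seconds
        return 0.5 * j_kgm2 * self.omega_m_base ** 2 / self.s_base_va

    def impedance_pu(self, z_ohm: float) -> float:
        # Electrical side: ohmic impedance expressed on the same base
        return z_ohm / self.z_base_ohm


base = MachineBase(s_base_va=2e6, v_ll_base_v=690.0, rated_speed_rpm=1500.0)
print(f"Z_base = {base.z_base_ohm:.5f} ohm, H = {base.inertia_constant_s(100.0):.3f} s")
```

With these illustrative values (2 MVA, 690 V, 1500 rpm, J = 100 kg·m²), Z_base is about 0.238 Ω and H is roughly 0.62 s; they are example numbers, not data for a real machine.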

Improved accuracy in multi domain modelling does not come from adding complexity for its own sake. It comes from aligning equations, parameters, and interfaces so your system representation behaves like a single physical system. This level of care lets you trust that test cases reflect the real behaviour you care about, not hidden numerical tricks. Over time, that trust saves effort during validation, reduces rework when requirements change, and supports stronger engineering decisions.

How to represent component interaction clearly across linked domains

Component interaction sits at the centre of multi domain modelling because no subsystem acts alone once a network is energized. A converter interacts with a feeder, which interacts with protection, which in turn interacts with mechanical loads and control systems. Clear representation of those relationships requires more than just connecting blocks with lines in a diagram. You need a deliberate approach to naming, interface signals, and documentation so anyone who opens the model understands how power and information flow from place to place.

Component interaction also depends on drawing clear boundaries between what each subsystem is responsible for. A line model should expose voltages and currents, not bury them behind internal scaling conventions that differ from the rest of the system. A controller should receive signals in well-defined units, with carefully documented filtering and delays that match your assumptions. When every component clearly announces what it expects at its terminals and what it provides in return, the full model becomes easier to test, modify, and explain.
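
A lightweight way to make each component “announce” its terminals is to declare ports with a unit and a direction, then check every link before a run. This is a minimal sketch under assumed names (`Port`, `Direction`, and `check_connection` are hypothetical); a real modelling environment would enforce interfaces through its own mechanisms.

```python
from dataclasses import dataclass
from enum import Enum


class Direction(Enum):
    INPUT = "input"
    OUTPUT = "output"


@dataclass(frozen=True)
class Port:
    """Hypothetical terminal declaration: what a subsystem expects or provides."""
    name: str
    unit: str            # e.g. "V", "A", "pu", "rad/s"
    direction: Direction
    description: str = ""


def check_connection(src: Port, dst: Port) -> None:
    """Raise ValueError if a link is not output -> input or if the units differ."""
    if src.direction is not Direction.OUTPUT:
        raise ValueError(f"{src.name} is not an output")
    if dst.direction is not Direction.INPUT:
        raise ValueError(f"{dst.name} is not an input")
    if src.unit != dst.unit:
        raise ValueError(
            f"unit mismatch: {src.name} [{src.unit}] -> {dst.name} [{dst.unit}]"
        )


line_v = Port("Vabc_meas", "V", Direction.OUTPUT, "measured phase voltages")
ctrl_v = Port("Vabc_in", "pu", Direction.INPUT, "controller voltage input on system base")
try:
    check_connection(line_v, ctrl_v)
except ValueError as err:
    print(err)  # the V -> pu link is flagged instead of silently mis-scaling
```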

Practices that help teams build clarity into system representation

Multi domain modelling becomes easier to manage when your team uses shared habits that support model clarity. These habits affect choices as simple as naming a signal and as deep as structuring entire subsystems. Strong practices make the model understandable for new students in a teaching lab, while still serving experienced engineers doing complex studies. The same practices also help you avoid surprises when a model is reused years later for a new project or a new course.

Standardize how you name and group components

Consistent naming is often the first clue that a system representation will be easy to work with. When components, signals, and subsystems follow a standard pattern, you can guess the purpose of a block from its name before you inspect its internals. A clear convention might encode domain (electrical, mechanical, control), phase, or voltage level, which cuts down on confusion when several similar signals appear in a scope. This practice helps new team members orient themselves quickly, especially in teaching or research settings.

Grouping components into logical subsystems also supports clarity. You might group all grid-side equipment, converter hardware, and controllers into separate top-level blocks with consistent interfaces. That structure mirrors how engineers often divide responsibilities in projects, which makes model reviews and handoffs less painful. Clear grouping also helps you isolate issues, because you can focus on one logical subsystem at a time without losing track of the full model.
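
A naming convention is easiest to enforce when it is written down as a pattern a script can check. The sketch below assumes a hypothetical convention, `<DOMAIN>_<Subsystem>_<Quantity>[_<phase>]` with EL, ME, and CT as domain codes; your group would substitute its own. It groups conforming signal names by domain and subsystem and lists the ones that need renaming.

```python
import re
from collections import defaultdict

# Hypothetical convention: <DOMAIN>_<Subsystem>_<Quantity>[_<phase>]
# DOMAIN is EL (electrical), ME (mechanical) or CT (control); phase is a, b or c.
NAME_PATTERN = re.compile(
    r"^(?P<domain>EL|ME|CT)_(?P<subsystem>[A-Za-z0-9]+)_(?P<quantity>[A-Za-z0-9]+)(?:_(?P<phase>[abc]))?$"
)


def group_signals(names):
    """Group conforming names by (domain, subsystem) and list names that break the convention."""
    groups = defaultdict(list)
    nonconforming = []
    for name in names:
        match = NAME_PATTERN.match(name)
        if match is None:
            nonconforming.append(name)
        else:
            groups[(match["domain"], match["subsystem"])].append(name)
    return dict(groups), nonconforming


signals = ["EL_Grid_Vrms_a", "EL_Grid_Vrms_b", "ME_Shaft_Speed", "CT_PLL_Freq", "volt1"]
groups, bad = group_signals(signals)
print(groups)
print("rename:", bad)
```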

Anchor models in physical equations and operating points

System representation improves when each submodel reflects the underlying physics rather than only matching a set of test curves. When you relate equations directly to known principles, such as power balance or mechanical torque relationships, you gain a more robust basis for extrapolating beyond the exact conditions used for tuning. This physical grounding is especially important in academic settings where the goal is understanding, not just matching a specification. It also supports clear teaching, because students can map equations in the model to what they learned in class.

Operating points provide another anchor for clarity. When you document and compute operating points explicitly, such as nominal voltages, currents, speeds, and angles, you create a shared reference for studying disturbances. That reference helps teams check whether controllers are tuned around realistic conditions and whether equipment ratings are respected. Operating point data also allows you to assess if model responses to faults, switching actions, or setpoint changes remain within expected ranges.
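
Computing the operating point explicitly, rather than reading it off a scope after the fact, gives a number everyone can check. This sketch assumes a balanced three-phase connection and uses the standard relations S = √(P² + Q²) and I_line = S / (√3·V_LL); the `OperatingPoint` class and `within_rating` helper are hypothetical names, and the power and rating values are illustrative.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    """Documented steady-state point for a three-phase connection (balanced conditions assumed)."""
    p_w: float      # active power, W
    q_var: float    # reactive power, var
    v_ll_v: float   # line-to-line RMS voltage, V

    @property
    def s_va(self) -> float:
        return math.hypot(self.p_w, self.q_var)

    @property
    def i_line_a(self) -> float:
        # Balanced three-phase: S = sqrt(3) * V_LL * I_line
        return self.s_va / (math.sqrt(3.0) * self.v_ll_v)

    @property
    def power_factor(self) -> float:
        return self.p_w / self.s_va if self.s_va > 0 else 1.0


def within_rating(op: OperatingPoint, i_rated_a: float, margin: float = 1.0) -> bool:
    """True if the line current stays at or below margin * rated current."""
    return op.i_line_a <= margin * i_rated_a


nominal = OperatingPoint(p_w=1.6e6, q_var=1.2e6, v_ll_v=690.0)
print(f"S = {nominal.s_va / 1e6:.2f} MVA, I = {nominal.i_line_a:.0f} A, pf = {nominal.power_factor:.2f}")
print("within 1700 A rating:", within_rating(nominal, 1700.0))
```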

Separate control, power, and auxiliary subsystems cleanly

Control logic often explodes in complexity as projects grow, which can hide errors and obscure the relationship between control decisions and physical outcomes. Clear separation of control, power, and auxiliary subsystems makes it easier to read and reason about each part. When control systems live in dedicated sections with clear input and output signals, you can review logic, adjust parameters, or prototype new strategies without disturbing the power stage. This separation also helps students learn the difference between what the controller is trying to do and what the system actually does.

Auxiliary subsystems, such as measurement, filtering, and monitoring, deserve the same level of clarity. These parts often create delays, noise, or scaling effects that influence protection and control behaviour significantly. Placing them in distinct blocks with documented assumptions helps you track their impact and adjust them consciously. That structure also reduces the risk that someone accidentally edits a measurement block while assuming they are changing core control logic.
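
To see how an auxiliary block changes what the controller sees, it can help to model the measurement path on its own. The sketch below is a hypothetical `MeasurementChain`: a discrete first-order low-pass filter (time constant `tau_s`) followed by a fixed delay of `delay_samples`. The time constant, sample time, and delay are assumed values chosen only to make the effect visible.

```python
import math
from collections import deque


class MeasurementChain:
    """Hypothetical auxiliary block: first-order low-pass filter followed by a fixed sample delay."""

    def __init__(self, tau_s: float, dt_s: float, delay_samples: int, initial: float = 0.0):
        # With alpha = 1 - exp(-dt/tau), a unit step from a zero state makes the filter state
        # equal 1 - exp(-k*dt/tau) after k samples, like the continuous lag 1/(tau*s + 1).
        self.alpha = 1.0 - math.exp(-dt_s / tau_s)
        self.state = initial
        self.buffer = deque([initial] * delay_samples)  # models transport or communication latency

    def step(self, x: float) -> float:
        self.state += self.alpha * (x - self.state)   # first-order lag update
        if not self.buffer:                           # zero delay: pass the filter output through
            return self.state
        self.buffer.append(self.state)
        return self.buffer.popleft()                  # value filtered delay_samples steps ago


# 10 ms filter, 1 ms sample time, 3-sample delay, unit step applied at the first sample
chain = MeasurementChain(tau_s=0.01, dt_s=0.001, delay_samples=3)
outputs = [chain.step(1.0) for _ in range(20)]
print([round(v, 3) for v in outputs[:6]])
```

For a unit step, the first three outputs stay at zero because of the delay, and the output then rises along the filter's exponential curve; a controller reading this signal reacts correspondingly later, which is why such blocks deserve a clearly labelled place in the model.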

Use consistent parameter documentation and units

Parameter clarity is one of the simplest ways to strengthen system representation, yet it is often overlooked when timelines are tight. Engineers and students may enter values directly into blocks without documenting where they came from, which units they use, or how they relate to equipment ratings. Consistent documentation inside the model, including comments, parameter tables, and references to data sheets, changes this situation. It creates a permanent record of modelling choices that survives personnel turnover and project shifts.

Units are equally important for model clarity. Mixing per-unit values with physical units, or failing to specify base values, quickly leads to mistakes that can distort results. When teams agree on unit conventions and enforce them across all domains, they remove a large source of silent error. Consistent units also make it easier to reuse submodels across projects, since you do not need to rediscover scaling choices every time.
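
Parameter documentation and unit discipline can live in the same small record. In this sketch, `Parameter` and `to_per_unit` are hypothetical helpers: each value carries its unit and source, and per-unit conversion refuses to run unless the base is stated explicitly in the same physical unit. The resistance value and source string are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Parameter:
    """One documented model parameter: value, physical unit, and where it came from."""
    name: str
    value: float
    unit: str     # physical unit, e.g. "ohm", "H", "kg*m^2"
    source: str   # data sheet, test report, or agreed specification


def to_per_unit(param: Parameter, base_value: float, base_unit: str) -> float:
    """Convert to per-unit only when the base is stated explicitly in the same unit."""
    if param.unit != base_unit:
        raise ValueError(
            f"{param.name}: unit {param.unit!r} does not match base unit {base_unit!r}"
        )
    if base_value <= 0:
        raise ValueError(f"{param.name}: base value must be positive")
    return param.value / base_value


# Illustrative values only; the source string is a placeholder, not a real document.
r_line = Parameter("R_line", 0.04761, "ohm", "hypothetical cable data sheet, table 2")
z_base_ohm = 690.0 ** 2 / 2e6   # 690 V line-to-line, 2 MVA base
print(f"{r_line.name} = {to_per_unit(r_line, z_base_ohm, 'ohm'):.3f} pu (from {r_line.source})")
```

Here R_line converts to 0.2 pu on the 690 V, 2 MVA base, while passing an inductance in henries against an ohmic base raises an error instead of producing a silently wrong number.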

Review models as a team, not alone

System representation reaches a higher standard when it is reviewed by more than one person. Individual engineers tend to focus on their own sections, which makes it easy to miss assumptions at interfaces, or to overlook side effects of a parameter change. Team reviews create space to walk through multi domain interactions, challenge assumptions, and agree on expected test outcomes. That process helps catch issues early and spreads understanding across the group.

Regular reviews also support mentoring and teaching. Students and early-career engineers gain insight into how experienced colleagues read and critique models, which accelerates their learning. For research and industry teams, scheduled review sessions turn model clarity into a shared responsibility rather than an individual preference. Over time, those sessions encourage consistent habits that make every new system representation more transparent than the last.

| Practice | Why it helps clarity | Practical outcome |
| --- | --- | --- |
| Standardized naming and grouping | Makes structure and purpose easy to recognize | Faster onboarding and simpler navigation through large system models |
| Physics-based equations and operating points | Aligns models with physical behaviour | More reliable extrapolation beyond initial test conditions |
| Separation of control, power, and auxiliary subsystems | Keeps responsibilities distinct | Easier debugging and safer edits to specific parts of the system |
| Consistent parameter documentation and units | Reduces hidden assumptions and scaling errors | Reusable submodels and fewer surprises during validation |
| Team-based model reviews | Spreads understanding and exposes blind spots | Stronger shared ownership of model clarity across projects and courses |

Practices like these do not require new tools so much as shared agreements within your lab or engineering group. Once those agreements exist, they guide every new multi domain model you build, regardless of system size or complexity. Over time, the result is a set of system representations that feel familiar, even when the underlying equipment or study goal changes. That familiarity supports faster studies, safer experimentation, and clearer engineering communication.

Factors that define a reliable model for system interaction studies

System interaction studies test how parts of a system respond to each other under stress, so they place heavy demands on model quality. A reliable model must react sensibly when parameters are pushed, faults are injected, or operating points move away from nominal. Reliability here does not mean perfection in every detail, but consistent behaviour that reflects the physics you care about within agreed limits. Clear criteria for reliability help teams decide when a model is ready for use in analysis, teaching, or project decisions.

- Verified parameter sources: Reliable models trace their parameters back to trusted sources, such as data sheets, test reports, or agreed specifications. Clear links to those sources make it easier to check, update, and justify modelling choices during reviews.

- Stable numerical behaviour: Reliable models remain stable under reasonable variations in time step, solver settings, and disturbance magnitude. If small numerical changes produce wildly different responses, it becomes difficult to trust conclusions from interaction studies.

- Consistent behaviour across scenarios: Reliable system representation produces responses that vary smoothly as test conditions change, such as different load levels or fault locations. Sudden, unexplained shifts in results often signal modelling issues rather than genuine system behaviour.

- Transparent assumptions and simplifications: Every multi domain model simplifies reality in some way, for example through ideal switches or neglected losses. Reliability improves when these simplifications are clearly documented, so users know where the model is strong and where caution is needed.

- Validated against measurements or reference models: Reliable models match measured data, higher-fidelity simulations, or well-accepted benchmark results within defined tolerances. This validation step anchors system interaction studies in evidence instead of intuition alone.

- Clear interface definitions between subsystems: Interaction studies depend on correct exchanges of power and information between components. Reliable models have well-defined interface signals, units, and directions at every subsystem boundary, which limits mismatches and misinterpretations.

- Reproducible test setups: Reliable models come with documented test configurations, including initial conditions, parameter sets, and run scripts. This reproducibility allows different users to repeat studies and obtain the same results, which strengthens trust in the model.
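
The “stable numerical behaviour” and “validated against reference models” criteria can be checked together on a case small enough to have an exact answer. This sketch, an illustration rather than a prescribed procedure, integrates a series RL circuit with backward Euler at a time step dt and at dt/2, then compares the two runs with each other and with the analytic solution i(t) = (V/R)(1 − e^(−Rt/L)). The circuit values and the 1 % tolerance are assumptions.

```python
import math


def simulate_rl_step(v: float, r: float, l: float, dt: float, t_end: float) -> float:
    """Backward-Euler integration of L di/dt = v - r*i with i(0) = 0; returns i(t_end)."""
    steps = round(t_end / dt)
    i = 0.0
    for _ in range(steps):
        # L*(i_new - i)/dt = v - r*i_new, solved for i_new
        i = (i + dt * v / l) / (1.0 + dt * r / l)
    return i


def analytic_rl_step(v: float, r: float, l: float, t: float) -> float:
    """Reference solution i(t) = (v/r) * (1 - exp(-r*t/l))."""
    return v / r * (1.0 - math.exp(-r * t / l))


def timestep_check(v, r, l, t_end, dt, rel_tol):
    """Compare runs at dt and dt/2 with each other and with the analytic reference."""
    coarse = simulate_rl_step(v, r, l, dt, t_end)
    fine = simulate_rl_step(v, r, l, dt / 2, t_end)
    reference = analytic_rl_step(v, r, l, t_end)
    step_sensitivity = abs(coarse - fine) / abs(reference)
    reference_error = abs(fine - reference) / abs(reference)
    return {
        "step_sensitivity": step_sensitivity,
        "reference_error": reference_error,
        "passes": step_sensitivity <= rel_tol and reference_error <= rel_tol,
    }


# 100 V step into 1 ohm / 10 mH (tau = 10 ms), checked at t = 20 ms with dt = tau / 100
print(timestep_check(v=100.0, r=1.0, l=0.01, t_end=0.02, dt=1e-4, rel_tol=0.01))
```

With dt = τ/100 both relative differences come out below 0.1 %, so the check passes at a 1 % tolerance; tightening the tolerance to 0.01 % makes it fail, which signals that a smaller step would be needed for that level of precision.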

Factors like these provide a practical checklist when deciding if a model is ready for serious system interaction work. You gain a consistent way to judge new models, bring students into an established workflow, and compare different modelling approaches fairly. Over time, these criteria also support continuous improvement, since every new project benefits from lessons learned on earlier studies. That steady refinement builds a modelling culture where reliability is expected, not accidental.

Steps engineers use to prepare models for consistent testing results

Consistent testing results start long before you press the run button. Engineers who specialise in system studies follow a series of preparation steps that align objectives, model scope, parameters, and test procedures. Those steps help reduce hidden variability between runs and across users, which improves confidence in both teaching and project work. Thoughtful preparation also saves time, because you spend less effort chasing inconsistent outcomes.

Clarify objectives and test cases

Preparation begins with a clear set of objectives and test cases. You might focus on fault ride-through, converter start-up behaviour, or coordination between protection and control systems, but each focus demands different operating points and measurement signals. Writing down these objectives ahead of model changes keeps scope under control and guides which details really matter. It also gives students and colleagues a shared reference for what “success” looks like.

Test cases should then be defined in specific, measurable terms. That can include fault type and location, load levels, converter setpoints, and time windows for analysis. Describing each case explicitly reduces the risk that two users run slightly different scenarios while assuming they are the same. Clear test descriptions also help you reuse setups across semesters or projects without re-deriving conditions from memory.
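
Writing a test case as structured data makes “specific, measurable terms” hard to skip. The sketch below uses a hypothetical `StudyCase` record whose fields follow the items listed above (fault type and location, load level, converter setpoints, analysis window); the validation rules in `__post_init__` are examples of checks a team might agree on, not a standard.

```python
from dataclasses import dataclass, field
from enum import Enum


class FaultType(Enum):
    THREE_PHASE = "3ph"
    SINGLE_LINE_TO_GROUND = "slg"
    LINE_TO_LINE = "ll"


@dataclass(frozen=True)
class StudyCase:
    """Hypothetical, explicit description of one test case; field names are illustrative."""
    name: str
    fault_type: FaultType
    fault_location: str                 # bus or line identifier used in the model
    fault_start_s: float
    fault_duration_s: float
    load_level_pu: float
    analysis_window_s: tuple = (0.0, 1.0)
    converter_setpoints: dict = field(default_factory=dict)  # e.g. {"P_ref_pu": 0.8}

    def __post_init__(self):
        t0, t1 = self.analysis_window_s
        if not t0 < t1:
            raise ValueError(f"{self.name}: analysis window must satisfy start < end")
        if self.fault_duration_s <= 0:
            raise ValueError(f"{self.name}: fault duration must be positive")
        if not t0 <= self.fault_start_s < t1:
            raise ValueError(f"{self.name}: fault must start inside the analysis window")
        if self.load_level_pu < 0:
            raise ValueError(f"{self.name}: load level cannot be negative")


frt_case = StudyCase(
    name="FRT_Bus3_SLG",
    fault_type=FaultType.SINGLE_LINE_TO_GROUND,
    fault_location="Bus3",
    fault_start_s=0.5,
    fault_duration_s=0.15,
    load_level_pu=1.0,
    analysis_window_s=(0.0, 2.0),
    converter_setpoints={"P_ref_pu": 0.8, "Q_ref_pu": 0.0},
)
print(frt_case)
```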

Scope and simplify the system thoughtfully

Once objectives are clear, engineers decide how much of the full system must be represented to answer the main questions. Including every possible detail might feel safe, but it often leads to unwieldy models that are difficult to understand and maintain. Purposeful scoping keeps only the portions of the network, converter hardware, and control logic that actually influence the study results. This careful selection preserves the interactions that matter while avoiding unnecessary complexity.

Simplification plays a similar role. When you replace a detailed model with a simpler representation, such as an aggregate load or averaged converter, you should record the reasons for that choice. Doing so helps others understand how the simplified model should be used and what conditions might break its assumptions. Students also benefit from seeing how engineers decide which details to keep and which to omit when time or computational resources are limited.
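
The averaged converter mentioned above rests on a simple relationship: over one switching period, an ideal two-level leg switching between 0 and V_dc has a mean output of d·V_dc for duty cycle d. The sketch below checks that numerically; the function names, 800 V DC link, and 10 kHz switching frequency are illustrative assumptions. What the averaged model discards is the switching ripple, which is why it suits long-duration system studies but not harmonic analysis.

```python
def switched_leg_voltage(t: float, duty: float, v_dc: float, f_sw: float) -> float:
    """Ideal two-level leg referenced to the negative DC rail: v_dc while on, 0 while off."""
    phase = (t * f_sw) % 1.0          # position within the switching period, 0..1
    return v_dc if phase < duty else 0.0


def averaged_leg_voltage(duty: float, v_dc: float) -> float:
    """Averaged model: switching ripple removed, only the period mean d * v_dc remains."""
    return duty * v_dc


def period_average(duty: float, v_dc: float, f_sw: float, samples: int = 10_000) -> float:
    """Numerically average the switched waveform over exactly one switching period."""
    period = 1.0 / f_sw
    total = sum(switched_leg_voltage(k * period / samples, duty, v_dc, f_sw) for k in range(samples))
    return total / samples


# d = 0.3, 800 V DC link, 10 kHz switching
print(period_average(0.3, 800.0, 10e3), averaged_leg_voltage(0.3, 800.0))
```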

Calibrate and validate submodels before full-system tests

Engineers often calibrate submodels individually before combining them into a full multi domain system. That might mean tuning a converter against manufacturer curves, matching a line model to known impedances, or validating a controller against a reference response. Working at the submodel level makes it easier to isolate issues and confirm that each piece behaves sensibly on its own. Once those checks pass, you have a more solid foundation for system-level interaction studies.

Validation then moves to small subsystems that capture key interactions, such as a converter connected to a short feeder with its controller. These smaller testbeds help you evaluate stability, frequency response, and protection behaviour without the complexity of the entire network. When each subsystem passes agreed validation criteria, the full model inherits that confidence. This approach also gives students manageable test cases they can explore without being overwhelmed.
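
Matching a line model to known impedances can be as simple as computing the series impedance from per-kilometre data and comparing it with a reference value under an agreed tolerance. This sketch assumes a short-line model with shunt capacitance neglected; the per-km parameters, the 10 km length, and the reference impedance are illustrative, and `matches_reference` is a hypothetical helper.

```python
import math


def line_series_impedance(r_ohm_per_km: float, l_h_per_km: float, length_km: float, f_hz: float) -> complex:
    """Series impedance of a short-line model (shunt capacitance neglected): (R' + j*omega*L') * length."""
    omega = 2.0 * math.pi * f_hz
    return complex(r_ohm_per_km, omega * l_h_per_km) * length_km


def matches_reference(model: complex, reference: complex, rel_tol: float) -> bool:
    """True when the complex error is within rel_tol of the reference magnitude."""
    return abs(model - reference) <= rel_tol * abs(reference)


# Illustrative per-km data and an assumed reference impedance for a 10 km feeder section at 50 Hz
z_model = line_series_impedance(r_ohm_per_km=0.1, l_h_per_km=1.0e-3, length_km=10.0, f_hz=50.0)
z_reference = complex(1.0, 3.14)
print(f"model {z_model:.4f} ohm, reference {z_reference} ohm, ok = {matches_reference(z_model, z_reference, 0.01)}")
```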

Freeze configurations and share test templates

After calibration and validation, engineers often “freeze” certain configurations to keep testing consistent. Frozen configurations might include parameter sets, solver settings, and test sequences that are known to produce stable, meaningful results. Recording these choices in a shared document or script prevents accidental changes that would alter outcomes without clear justification. This practice is especially important when multiple users rely on the same model for different studies.

Test templates offer a practical way to share those frozen setups. A template might preconfigure fault locations, control setpoints, and measurement scopes for each study. Users can then clone the template, adjust only the aspects relevant to their comparison, and leave every other condition exactly as the template defines it. This approach boosts reproducibility within teams and classrooms, while still leaving room for exploration and adaptation.
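
A frozen configuration and a template can be expressed directly in code. In this sketch, `StudyConfig` is a hypothetical frozen dataclass, so its fields cannot be reassigned after creation; `dataclasses.replace` produces a variant that differs only where you say, the hypothetical `changed_fields` helper reports those differences, and a short SHA-256 fingerprint can tag result files with the exact setup that produced them. Field names and values are illustrative.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, fields, replace


@dataclass(frozen=True)
class StudyConfig:
    """Hypothetical frozen study setup; field names and values are illustrative only."""
    solver: str            # label for the agreed solver choice
    time_step_s: float
    stop_time_s: float
    fault_location: str
    p_ref_pu: float

    def fingerprint(self) -> str:
        """Short hash of every setting, handy for tagging result files with the exact setup."""
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


def changed_fields(base: StudyConfig, variant: StudyConfig) -> dict:
    """Map each field that differs from the template to its (template, variant) values."""
    return {
        f.name: (getattr(base, f.name), getattr(variant, f.name))
        for f in fields(base)
        if getattr(base, f.name) != getattr(variant, f.name)
    }


TEMPLATE = StudyConfig(solver="fixed-step", time_step_s=50e-6, stop_time_s=2.0,
                       fault_location="Bus3", p_ref_pu=1.0)

# Clone the template and change only the quantity under comparison
half_power = replace(TEMPLATE, p_ref_pu=0.5)
print(changed_fields(TEMPLATE, half_power), TEMPLATE.fingerprint(), half_power.fingerprint())
```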

Effective preparation brings structure and predictability to system testing. When objectives, scoping decisions, calibration steps, and test templates are all documented, your model becomes more than a personal tool. It turns into a shared asset that students, engineers, and researchers can trust for consistent results. That shared trust is a key ingredient in building confidence around the multi domain modelling practices your group depends on.

How model clarity supports debugging, learning, and engineering confidence

Model clarity has a direct impact on how quickly you can debug strange behaviour and how well you can explain results to others. When system representation is tidy, documented, and grounded in physics, you are less likely to get stuck wondering what a mysterious block or parameter actually does. This clarity is crucial for students, who often learn modelling and system theory at the same time. It also supports senior engineers who need to move quickly from symptom to cause in complex studies.

- Faster root-cause analysis during debugging: Clear models make it easier to trace signals from outputs back to sources, review parameters, and isolate where behaviour diverges from expectations. This structure shortens debugging sessions and reduces frustration when tests do not match intuition.

- Better learning outcomes for students: When model clarity matches teaching goals, students can link diagrams and equations to concepts from lectures and labs. They spend more time reasoning about system behaviour and less time guessing what a block might be doing.

- Higher confidence in test conclusions: Engineers are more willing to trust results when they understand how model elements interact and where approximations exist. That confidence helps teams use simulation outcomes in design reviews and technical discussions without hesitation.

- Safer experimentation with extreme scenarios: Clear system representation allows you to push models into unusual conditions, such as severe faults or extreme parameter variations, while still understanding why the system reacts a certain way. This understanding supports safer planning for hardware tests and field commissioning activities.

- Easier onboarding for new team members: New engineers and researchers join projects more smoothly when models they inherit are readable and documented. Model clarity reduces ramp-up time, which in turn lowers the risk that someone introduces errors while trying to get oriented.

Model clarity, therefore, is not just a stylistic preference. It shapes how users build understanding, make engineering judgments, and communicate insights within their teams. Clear system representation builds a shared mental picture of the system that survives staff changes, new study topics, and evolving requirements. That shared picture is part of what makes simulation an enduring partner for confident engineering work.

How SPS SOFTWARE supports clear and reliable multi domain modelling

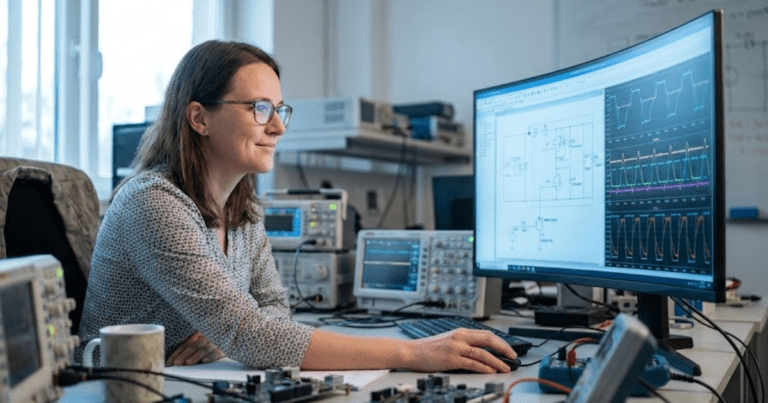

SPS SOFTWARE focuses on helping engineers, educators, and students create multi domain models that are transparent, physics-based, and ready for system studies. The platform offers component libraries for power systems and power electronics that line up naturally with how you think about lines, transformers, converters, and controllers. Each component exposes parameters in a clear, organized way, which makes it easier to connect data sheets and specifications to the model. Flexible options for modelling detail let you choose between switching-level representation and averaged behaviour while keeping interfaces consistent.

These qualities support your daily tasks in very concrete ways. A utility engineer can build a feeder with embedded converters and protection, then study faults and switching events without fighting the modelling framework. A teaching lab can use the same tools to walk students from simple single-line diagrams to full multi domain models that show how control, power, and network effects fit together. Research teams can share open models that colleagues can inspect, modify, and extend, instead of relying on opaque black boxes. These strengths make SPS SOFTWARE a dependable partner for teaching, research, and engineering work.